Executive Comparison of AI Governance Frameworks for Risk & Compliance

Introduction

Artificial intelligence (AI) is becoming integral to enterprise operations and risk management, including emerging Autonomous IRM (Integrated Risk Management) initiatives in which AI agents help identify and manage risks with limited human prompting. Executives and boards need to ensure that such AI deployments are trustworthy, compliant, and aligned with business objectives. Several frameworks have emerged to govern AI risk and compliance. Below is a comparison of three key frameworks, focusing on what executives should understand, monitor, and prioritize in each: ISO/IEC 42001 (the AI management system standard published in December 2023), the EU AI Act (the European Union's AI regulation, in force since August 2024 with obligations phasing in over several years), and the NIST AI Risk Management Framework (AI RMF), a voluntary U.S. guideline.

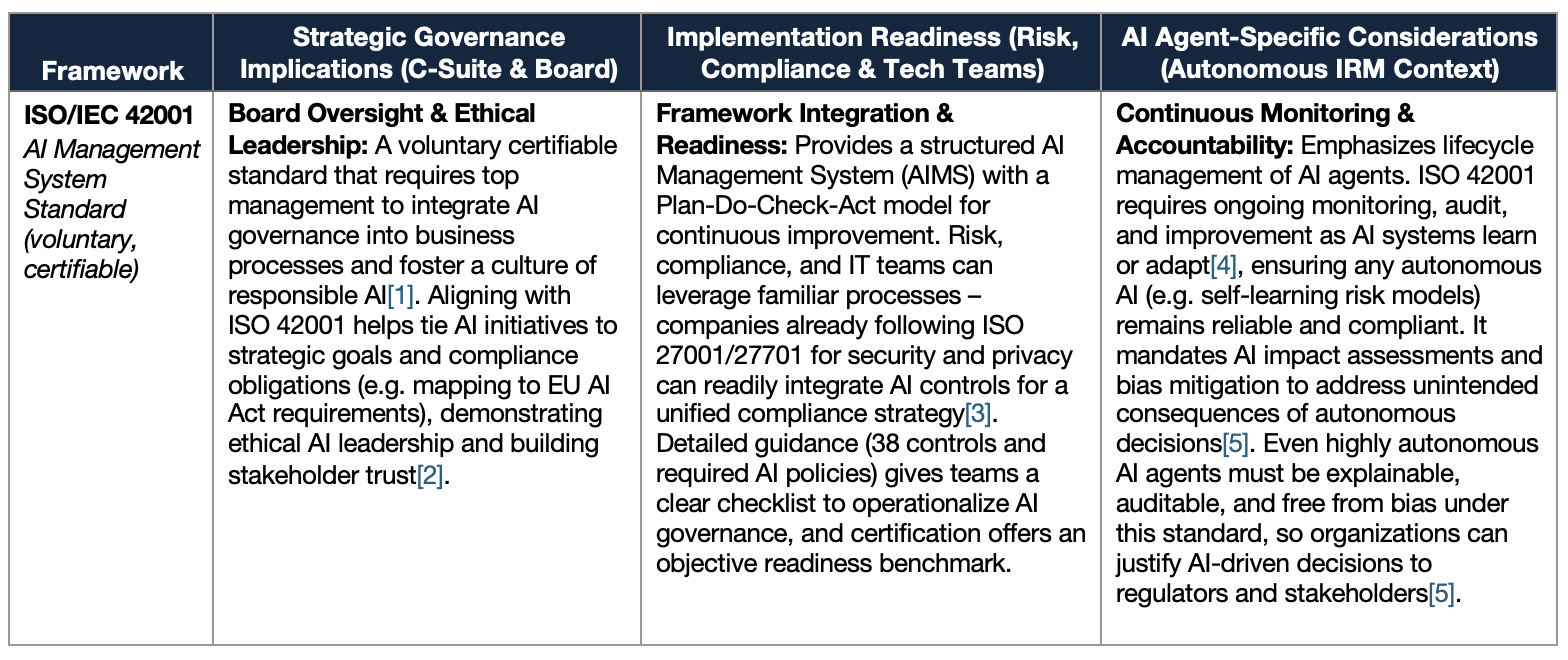

Comparison Matrix: ISO 42001 vs. EU AI Act vs. NIST AI RMF

Operational Use Cases Applying the Frameworks

To illustrate how these frameworks guide real-world risk management, consider the following scenarios in the technology risk, operational risk, and governance, risk & compliance (GRC) domains:

Technology Risk Management (AI in Cybersecurity): A global bank deploys a generative AI system to monitor network traffic and flag cyber threats in real time. This introduces technology risk: for example, the AI might produce false positives that disrupt operations or false negatives that miss attacks. The bank’s technology risk team uses the NIST AI RMF to establish robustness and accuracy controls, regularly testing the AI against adversarial scenarios and tuning it to minimize errors[15].
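The error-tuning step can be illustrated with a minimal Python sketch, assuming the team has historical events that the model has scored and analysts have labeled as real attacks or benign traffic. The function names, example scores, and the 10:1 cost of a missed attack relative to a false alarm are hypothetical; the NIST AI RMF does not prescribe this method. The sketch picks the alert threshold with the lowest weighted count of false positives and false negatives.

```python
from typing import Optional, Sequence, Tuple

def confusion_at_threshold(
    scores: Sequence[float], labels: Sequence[bool], threshold: float
) -> Tuple[int, int, int, int]:
    """Count (true pos, false pos, false neg, true neg) when an alert fires at score >= threshold."""
    tp = fp = fn = tn = 0
    for score, is_attack in zip(scores, labels):
        flagged = score >= threshold
        if flagged and is_attack:
            tp += 1
        elif flagged:
            fp += 1  # alert on benign traffic: may disrupt operations
        elif is_attack:
            fn += 1  # missed attack
        else:
            tn += 1
    return tp, fp, fn, tn

def pick_threshold(
    scores: Sequence[float], labels: Sequence[bool], fp_cost: float, fn_cost: float
) -> Tuple[float, float]:
    """Try each observed score as a threshold (plus 'never alert') and keep the cheapest."""
    best: Optional[Tuple[float, float]] = None
    for t in sorted(set(scores)) + [float("inf")]:
        _, fp, fn, _ = confusion_at_threshold(scores, labels, t)
        cost = fp * fp_cost + fn * fn_cost
        if best is None or cost < best[1]:  # ties keep the lower threshold
            best = (t, cost)
    return best  # always set: the candidate list is never empty

# Hypothetical validation data: model scores and analyst labels (True = real attack).
scores = [0.1, 0.4, 0.35, 0.8, 0.9, 0.2]
labels = [False, False, True, True, True, False]
print(pick_threshold(scores, labels, fp_cost=1, fn_cost=10))  # → (0.35, 1)
```

With these example numbers the sketch settles on 0.35: one false alarm and no missed attacks. Raising the assumed cost of false positives would push the threshold up; adversarial testing would be a separate exercise on top of this tuning.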

ISO 42001’s continuous monitoring process is applied to track the AI’s performance over time and feed improvements back into the system[4]. While the EU AI Act may not explicitly classify an internal cybersecurity AI as “high-risk”, the CISO and risk officers align with its principles of oversight and transparency, for example by documenting the AI’s decision logic and keeping humans ready to intervene on critical security decisions. This combined approach helps ensure that the AI strengthens security without compromising stability or compliance.
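One way to make continuous monitoring concrete is a rolling check on alert quality. The Python sketch below assumes analysts record whether each AI alert turned out to be a real threat; the class name, window size, precision floor, and minimum sample count are hypothetical values, not figures from ISO 42001. When the share of confirmed alerts in the recent window falls below the floor, the model is routed back for human review and retuning.

```python
from collections import deque
from typing import Deque, Optional

class RollingPrecisionMonitor:
    """Tracks what share of recent AI alerts analysts confirmed as real threats.

    Hypothetical control: window size, precision floor, and minimum sample
    count are illustrative values an organization would set itself.
    """

    def __init__(self, window: int = 100, floor: float = 0.5, min_samples: int = 20) -> None:
        self.outcomes: Deque[bool] = deque(maxlen=window)  # oldest verdicts drop off
        self.floor = floor
        self.min_samples = min_samples

    def record(self, confirmed: bool) -> None:
        """Log an analyst verdict on one alert (True = real threat)."""
        self.outcomes.append(confirmed)

    def precision(self) -> Optional[float]:
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def needs_review(self) -> bool:
        """True when enough verdicts exist and precision has fallen below the floor."""
        if len(self.outcomes) < self.min_samples:
            return False
        return self.precision() < self.floor

monitor = RollingPrecisionMonitor(window=10, floor=0.5, min_samples=5)
for verdict in [True] * 4 + [False] * 6:
    monitor.record(verdict)
print(monitor.precision(), monitor.needs_review())  # → 0.4 True
```

The minimum sample count keeps a handful of early false alarms from triggering a review; the window length trades responsiveness against noise.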

Operational Risk Management (AI in a Core Business Process): An insurance company uses an AI system to automate claims processing, assessing claims for potential fraud or errors and approving straightforward cases. This reduces manual work but poses operational risks: biased decisions could alienate customers, and processing errors could cause financial loss. Under the EU AI Act, this use case is likely to be treated as “high-risk” (it affects individuals’ access to services and could affect their rights), so the company implements the Act’s required controls: a formal risk management system, human oversight for contested claims, and rigorous data quality checks on the training data[8][9].
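The Act’s data-governance requirements go well beyond automated checks, but part of “rigorous data quality checks” can be automated. The Python sketch below assumes a hypothetical claims schema (`claim_id`, `amount`, `label`) and a hypothetical label set; it flags missing fields, negative amounts, unknown labels, and duplicate claim IDs before the data is used for training.

```python
from collections import Counter
from typing import Any, Dict, List, Tuple

# Hypothetical schema and label set for historical claims used as training data.
REQUIRED_FIELDS = ("claim_id", "amount", "label")
VALID_LABELS = {"approve", "review", "fraud"}

def data_quality_issues(records: List[Dict[str, Any]]) -> List[Tuple[int, str]]:
    """Return (row index, issue) pairs for rows that fail basic quality checks."""
    issues: List[Tuple[int, str]] = []
    id_counts = Counter(r.get("claim_id") for r in records)
    for i, r in enumerate(records):
        missing = [f for f in REQUIRED_FIELDS if r.get(f) is None]
        if missing:
            issues.append((i, "missing:" + ",".join(missing)))
        amount = r.get("amount")
        if amount is not None and amount < 0:
            issues.append((i, "negative_amount"))
        label = r.get("label")
        if label is not None and label not in VALID_LABELS:
            issues.append((i, "invalid_label"))
        claim_id = r.get("claim_id")
        if claim_id is not None and id_counts[claim_id] > 1:
            issues.append((i, "duplicate_id"))
    return issues

training_rows = [
    {"claim_id": "C1", "amount": 1200.0, "label": "approve"},
    {"claim_id": "C2", "amount": -50.0, "label": "approve"},
    {"claim_id": "C2", "amount": 300.0, "label": "unknown"},
    {"claim_id": "C3", "amount": None, "label": "fraud"},
]
for row, issue in data_quality_issues(training_rows):
    print(row, issue)
```

Rows with issues would be corrected or excluded, and the results kept as evidence for the risk management file.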

To operationalize the Act’s requirements, the company turns to ISO 42001, conducting an AI impact assessment and instituting bias mitigation measures as prescribed by the standard (ensuring the model’s decisions are fair and explainable)[16]. NIST’s AI RMF further guides the IT and operations teams on validation testing (verifying that the AI’s fraud flags are reliable) and incident response (procedures for when the AI fails or flags too many false positives). Through these frameworks, the company’s executives gain confidence that the AI-driven process is under control: it improves efficiency while keeping checkpoints in place to prevent operational surprises.
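One simple bias check that could feed such an impact assessment compares approval rates across customer groups. The Python sketch below is a hypothetical illustration, not a method prescribed by ISO 42001: the group labels are placeholders, and the 0.8 threshold borrows the “four-fifths” rule of thumb from U.S. employment-selection guidance. A flag would trigger human investigation, not an automatic conclusion of bias, and other fairness metrics may suit a given use case better.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

def approval_rates(decisions: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Approval rate per group from (group, approved) pairs."""
    totals: Dict[str, int] = defaultdict(int)
    approved: Dict[str, int] = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        approved[group] += int(ok)
    return {g: approved[g] / totals[g] for g in totals}

def disparity_ratio(rates: Dict[str, float]) -> float:
    """Lowest group approval rate divided by the highest (1.0 means parity)."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0

def bias_flag(decisions: Iterable[Tuple[str, bool]], threshold: float = 0.8) -> Tuple[bool, float]:
    """Flag for human investigation when the disparity ratio falls below the threshold."""
    ratio = disparity_ratio(approval_rates(decisions))
    return ratio < threshold, ratio

# Hypothetical automated claim decisions for two customer groups.
decisions = [("group_a", True)] * 4 + [("group_b", True), ("group_b", False)]
print(bias_flag(decisions))  # → (True, 0.5)
```

Running this periodically on live decisions, not only at launch, matches the continuous-review expectation the frameworks share.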

Governance, Risk & Compliance (GRC) – Autonomous IRM Assistant: A large enterprise’s compliance department deploys an AI agent to autonomously scan employee communications and transactions for potential policy violations or risks. This “autonomous IRM” agent can identify issues (for example, insider trading risks or compliance lapses) and generate risk reports without human prompting. The upside is proactive risk insight; the downside is that the agent might err or overstep, raising privacy concerns or false alarms. To govern this, management applies NIST’s AI RMF as a foundation, ensuring clear accountability and human oversight for the AI’s operation. A compliance officer is assigned to review the AI’s alerts and can override or correct them, consistent with NIST and ISO guidance that AI outputs remain subject to human judgment[11][5].
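The oversight arrangement described here, where the agent proposes and a named officer decides, can be sketched as a review queue in which nothing the AI raises reaches a risk report until a person confirms it. The Python class, field, and status names below are hypothetical; a real deployment would sit on the firm’s own case-management tooling.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional

@dataclass
class Alert:
    alert_id: str
    description: str
    status: str = "pending"  # pending -> confirmed | dismissed
    reviewer: Optional[str] = None
    note: str = ""

class ReviewQueue:
    """AI-raised alerts wait here until a named human reviewer decides on them."""

    def __init__(self) -> None:
        self.alerts: Dict[str, Alert] = {}

    def submit(self, alert_id: str, description: str) -> None:
        """Called by the AI agent; every alert starts as pending."""
        self.alerts[alert_id] = Alert(alert_id, description)

    def decide(self, alert_id: str, reviewer: str, confirm: bool, note: str = "") -> Alert:
        """Human decision: confirm the alert or override (dismiss) it, with a reason."""
        alert = self.alerts[alert_id]
        if alert.status != "pending":
            raise ValueError(f"alert {alert_id} has already been decided")
        if not reviewer:
            raise ValueError("a named reviewer is required")
        alert.status = "confirmed" if confirm else "dismissed"
        alert.reviewer = reviewer
        alert.note = note
        return alert

    def reportable(self) -> List[str]:
        """Only human-confirmed alerts go into the risk report."""
        return [a.alert_id for a in self.alerts.values() if a.status == "confirmed"]

queue = ReviewQueue()
queue.submit("A1", "Trade placed shortly before a material announcement")
queue.submit("A2", "Message contains a restricted keyword")
queue.decide("A1", reviewer="compliance_officer_1", confirm=True)
queue.decide("A2", reviewer="compliance_officer_1", confirm=False, note="false alarm: public information")
print(queue.reportable())  # → ['A1']
```

Requiring a named reviewer and a recorded reason for each override is what makes the human judgment itself reviewable later.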

The organization’s ISO 42001-based AI management system requires that the agent’s algorithms be auditable and transparent, so that internal audit can review how the AI reaches its decisions[5]. Whether an internal compliance tool like this falls under a specific law depends on its use and jurisdiction (the EU AI Act lists AI used to monitor and evaluate the behavior of workers among its high-risk employment uses), so the company follows EU AI Act principles regardless: maintaining documentation, being transparent with affected employees, and periodically reviewing the AI’s impact on fairness. This gives the board and regulators confidence that even an autonomous compliance tool operates within the firm’s risk appetite and ethical standards. For example, if the AI flags an employee unfairly, human compliance managers investigate and address any bias in the AI’s logic before it causes harm. The result is a faster, AI-assisted GRC process that still meets the governance and accountability expectations set by these frameworks.
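To make the agent’s decisions reviewable after the fact, each alert, model version, output, and reviewer can be written to an append-only log. The Python sketch below is a hypothetical illustration using the standard `hashlib` and `json` modules: each entry stores a SHA-256 hash covering the previous entry’s hash, so editing a past record breaks verification. It is tamper-evident rather than tamper-proof; anyone with write access could recompute the whole chain, and dropping the final entry goes unnoticed, so in practice the latest hash would also be stored where the system itself cannot modify it.

```python
import hashlib
import json
from typing import Any, Dict, List

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

def _digest(prev_hash: str, record: Dict[str, Any]) -> str:
    # sort_keys gives a stable serialization, so the same record always hashes the same
    payload = json.dumps(record, sort_keys=True)
    return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()

class AuditLog:
    """Append-only, hash-chained record of AI decisions (tamper-evident, not tamper-proof)."""

    def __init__(self) -> None:
        self.entries: List[Dict[str, Any]] = []

    def append(self, record: Dict[str, Any]) -> str:
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS
        digest = _digest(prev_hash, record)
        # store a copy so later changes to the caller's dict do not alter the log
        self.entries.append({"record": dict(record), "prev": prev_hash, "hash": digest})
        return digest

    def verify(self) -> bool:
        """Recompute the chain; an edited entry, or one removed mid-chain, returns False."""
        prev_hash = GENESIS
        for entry in self.entries:
            if entry["prev"] != prev_hash or entry["hash"] != _digest(prev_hash, entry["record"]):
                return False
            prev_hash = entry["hash"]
        return True

log = AuditLog()
log.append({"alert_id": "A1", "model_version": "v1.2", "output": "flag", "reviewer": "compliance_officer_1"})
log.append({"alert_id": "A2", "model_version": "v1.2", "output": "flag", "reviewer": "compliance_officer_1"})
print(log.verify())  # → True
log.entries[0]["record"]["output"] = "no_flag"  # simulate tampering with a past entry
print(log.verify())  # → False
```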

Key Takeaway for Executives: Across these examples, the common theme is the need for top-down governance and cross-functional readiness when introducing AI into risk domains. Executives should prioritize establishing an AI governance framework that fits their organization, whether through ISO 42001 certification, an EU AI Act compliance program, or adoption of the NIST AI RMF. This means setting clear roles and responsibilities (for example, board oversight and named risk owners), training technical and compliance teams on AI-specific controls, and continuously monitoring AI systems in operation. By doing so, the C-suite and board can confidently use AI, including autonomous IRM tools, to enhance risk management while maintaining control, compliance, and trust in these powerful systems.

References:

[1] ISO 42001: paving the way for ethical AI. EY (US).

https://www.ey.com/en_us/insights/ai/iso-42001-paving-the-way-for-ethical-ai

[2] [3] [4] [5] [13] [16] ISO/IEC 42001: a new standard for AI governance. KPMG Switzerland.

https://kpmg.com/ch/en/insights/artificial-intelligence/iso-iec-42001.html

[6] [7] [8] [9] Preparing for the EU AI Act: A C-Suite Action Plan. EU AI Act (euaiact.com).

https://www.euaiact.com/blog/c-suite-action-plan-preparing-for-eu-ai-act

[10] [11] [14] [15] NIST AI Risk Management Framework: A simple guide to smarter AI governance. Diligent.

https://www.diligent.com/resources/blog/nist-ai-risk-management-framework

[12] AI Risk Management Framework. NIST.

https://www.nist.gov/itl/ai-risk-management-framework