Governance and Management: The Distinction That Determines Risk Effectiveness

Most risk programs do not fail because they lack governance; they fail because governance never becomes execution. That gap often begins with language. Executives and risk leaders use “governance” and “management” interchangeably, but the enterprise consequences are not interchangeable. When organizations try to manage through policy or govern through procedures, accountability blurs and risk outcomes deteriorate. This confusion is no longer a niche concern. It is now a primary constraint on enterprise risk capability as organizations scale cybersecurity, privacy, operational resilience, third-party risk, model risk, and AI. Integrated risk management (IRM) is the bridge that converts governance expectations into measurable outcomes spanning compliance, assurance, performance, and resilience.

Wheelhouse Advisors’ position is simple:

Governance defines expectations. Management delivers outcomes.

That distinction is the structural difference between defining what must be true and making it true. It also explains why many risk programs look mature on paper yet remain fragile in practice.

The hidden failure mode behind many “integrated” programs

A primary reason many legacy governance, risk, and compliance (GRC) programs plateaued is that “integration” was often interpreted as collapsing governance and management into a single compliance system. In that design, integration becomes a workflow and a repository, not an operating model.

The result is predictable. Organizations build strong artifacts (policies, control libraries, attestations, and audit-ready evidence), but struggle to deliver consistent outcomes (continuous validation, timely response, measurable risk reduction, and repeatable performance under stress). This is not a critique of the GRC concept. It is a critique of a typical implementation pattern where program mechanics substitute for operational management.

If leaders want an early warning sign, it is this: when teams cannot clearly distinguish expectations from execution, they begin to treat documentation as the work, and assurance becomes backward-looking by default.

IRM as the bridge between expectations and outcomes

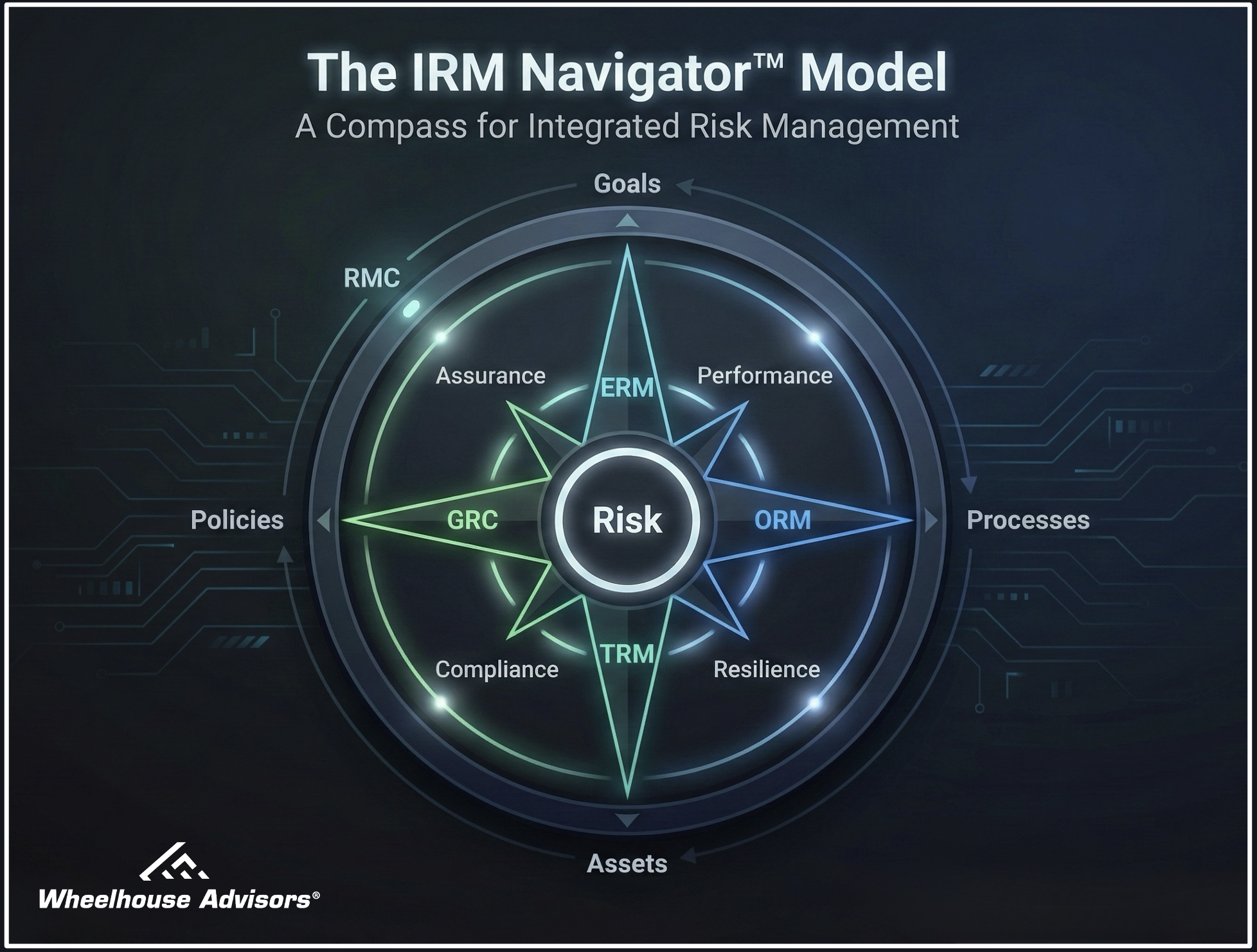

The IRM Navigator™ Model clarifies how enterprises scale beyond that limitation. It frames integrated risk management as the operating model that translates governance-defined expectations into management execution across four risk domains and four enterprise objectives.

The objectives form a continuous loop:

Performance

Resilience

Compliance

Assurance

Each risk domain functions as a practical bridge between two objectives:

GRC bridges Compliance and Assurance.

ERM (enterprise risk management) bridges Assurance and Performance.

ORM (operational risk management) bridges Performance and Resilience.

TRM (technology risk management) bridges Resilience and Compliance.

This is the most critical implication: GRC is essential, but it is one bridge, not the whole system. IRM unifies the four bridges so that expectations move reliably into execution, and execution outcomes flow back into assurance and decision-making.

Stated plainly, an organization can have strong GRC and still struggle to manage risk at enterprise speed if ERM, ORM, and TRM remain isolated from the outcomes loop.
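The bridge structure can be checked mechanically. The following is a minimal sketch, assuming the model reduces to a simple graph: objectives are nodes, and each risk domain is an edge between the two objectives it bridges. The names `BRIDGES` and `objective_loop` are hypothetical illustrations, not part of the IRM Navigator™ Model itself. The walk confirms that the four bridges close into a single loop. Dropping any one bridge, a rough analogue of a domain operating in isolation, leaves two objectives with only one connection, and the check reports that as a broken loop.

```python
from collections import defaultdict

# Hypothetical encoding of the bridge list: each risk domain is an edge
# between the two enterprise objectives it connects.
BRIDGES = {
    "GRC": ("Compliance", "Assurance"),
    "ERM": ("Assurance", "Performance"),
    "ORM": ("Performance", "Resilience"),
    "TRM": ("Resilience", "Compliance"),
}


def objective_loop(bridges: dict[str, tuple[str, str]]) -> list[str]:
    """Walk the bridges and return the objectives in loop order.

    Raises ValueError unless every objective sits between exactly two
    bridges and all objectives lie on one closed loop.
    """
    neighbours: dict[str, list[str]] = defaultdict(list)
    for a, b in bridges.values():
        neighbours[a].append(b)
        neighbours[b].append(a)

    if any(len(n) != 2 for n in neighbours.values()):
        raise ValueError("every objective must sit between exactly two bridges")

    start = next(iter(neighbours))
    loop, previous, current = [start], None, start
    while True:
        # Step to the neighbour we did not just arrive from.
        a, b = neighbours[current]
        nxt = b if a == previous else a
        if nxt == start:
            break
        loop.append(nxt)
        previous, current = current, nxt

    if len(loop) != len(neighbours):
        raise ValueError("bridges form more than one disconnected loop")
    return loop


if __name__ == "__main__":
    print(" -> ".join(objective_loop(BRIDGES)))
    # Compliance -> Assurance -> Performance -> Resilience
```

Removing any entry from `BRIDGES` before calling `objective_loop` raises `ValueError`. This mirrors the point that strong GRC alone does not close the loop.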

Why AI exposes the distinction faster than any other domain

The phrase “AI governance” is widely used as if it were a complete operating model. In practice, many efforts labeled AI governance stop at expectation-setting: principles, standards, committee reviews, checklists, and documentation of intended behavior. Those activities are necessary, but they are not sufficient to manage AI at scale.

AI management requires execution capability: validation, testing, performance monitoring, drift detection, bias assessment, incident response, and system-level assurance. None of those outcomes appear simply because an organization published principles or created a committee. They appear when expectations are translated into operational controls and continuously verified in production.

AI accelerates the cost of confusion because models, data, and environments change continuously. Without a clear bridge between expectations and execution, organizations will produce artifacts faster than they can build confidence.

Practical guidance for leaders

Most organizations do not need a new acronym. They need clarity on responsibility and on the instrumentation of execution.

A pragmatic starting point is to ask three questions:

1. Can we state governance expectations in a testable way?

If expectations cannot be operationalized (thresholds, decision rights, control criteria), execution cannot reliably deliver them.

2. Do we manage risk through continuous signals, not periodic paperwork?

Mature programs reduce reliance on manual, episodic assessments and increase monitoring, validation, and automated evidence.

3. Are the four bridges working together, or operating as separate programs?

If GRC is strong but disconnected from ERM prioritization, ORM execution, or TRM control validation, “integration” remains a label rather than an outcome.
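Question 1 can be made concrete with a small example. The sketch below assumes, hypothetically, that a governance expectation can be reduced to four parts: a named metric, a threshold, a comparison, and an accountable owner. This loosely mirrors the thresholds, decision rights, and control criteria named above. Governance writes the `Expectation` records, management supplies the observed signals, and `evaluate` turns them into pass/fail evidence rather than an attestation. The class names, metric names, thresholds, and owners are invented for illustration. A missing signal is treated as a failure, in the spirit of question 2: an expectation with no current signal cannot be shown to hold.

```python
from dataclasses import dataclass
from operator import ge, le
from typing import Callable, Optional


@dataclass(frozen=True)
class Expectation:
    """A governance expectation stated so that it can be tested (hypothetical schema)."""
    name: str
    metric: str                              # signal management must produce
    threshold: float                         # control criterion set by governance
    compare: Callable[[float, float], bool]  # le: observed <= threshold; ge: observed >= threshold
    owner: str                               # decision right: who acts on a breach


@dataclass(frozen=True)
class Result:
    expectation: str
    observed: Optional[float]
    passed: bool
    owner: str


def evaluate(expectations: list[Expectation], signals: dict[str, float]) -> list[Result]:
    """Test each expectation against the latest observed signals.

    A missing signal counts as a failure: without current evidence,
    the expectation cannot be shown to hold.
    """
    results = []
    for exp in expectations:
        observed = signals.get(exp.metric)
        passed = observed is not None and exp.compare(observed, exp.threshold)
        results.append(Result(exp.name, observed, passed, exp.owner))
    return results


# Hypothetical expectations, for illustration only.
EXPECTATIONS = [
    Expectation("Critical patches applied", "days_to_patch_critical", 14, le, "CISO"),
    Expectation("Model accuracy maintained", "model_accuracy", 0.90, ge, "Model owner"),
    Expectation("Vendor assessments current", "vendor_coverage", 1.0, ge, "TPRM lead"),
]

if __name__ == "__main__":
    # Hypothetical signal values; "vendor_coverage" is deliberately missing.
    signals = {"days_to_patch_critical": 21, "model_accuracy": 0.93}
    for r in evaluate(EXPECTATIONS, signals):
        status = "PASS" if r.passed else "FAIL"
        print(f"{status}  {r.expectation} (observed={r.observed}, owner={r.owner})")
```

With these sample signals, the run reports three results. The patching expectation fails (21 days against a 14-day threshold). The model-accuracy expectation passes. The vendor expectation fails because no signal was supplied. In practice, signals would come from monitoring and testing systems rather than a hard-coded dictionary.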

Organizations that scale risk capability will be those that keep governance and management distinct, then build an operating model that links them across the four objectives through the four domain bridges.

Risk Event Forecast

Predicted risk event (next 12 to 18 months, 65% probability): Many programs branded as “AI governance” will stall at principles, review committees, and documentation, producing compliance artifacts but limited operational control evidence (validation, monitoring, drift response, incident handling).

Strategic change: Buyer expectations will shift toward execution instrumentation and measurable assurance, elevating continuous testing, monitoring, and response as primary selection criteria for platforms and services.

References

1. Wheelhouse Advisors, IRM Navigator™ Model and IRM Navigator™ Curve

2. Wheelhouse Advisors, The RTJ Bridge (premium research and editorials)

3. NIST, Artificial Intelligence Risk Management Framework (AI RMF 1.0), Jan 2023

4. ISO/IEC, ISO/IEC 42001:2023 Artificial Intelligence Management System

5. European Union, Regulation (EU) 2024/1689 (Artificial Intelligence Act), EUR-Lex